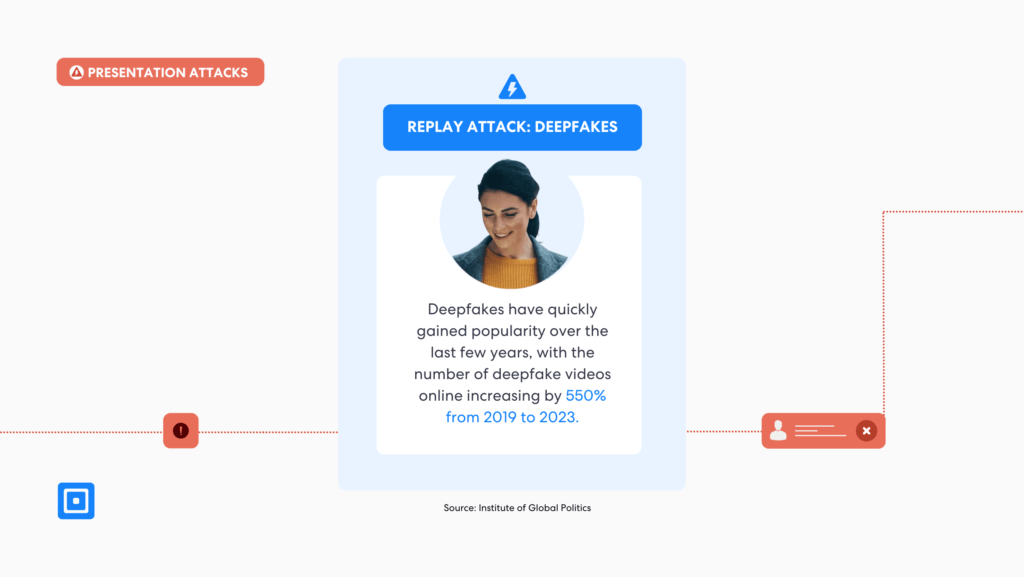

The rise of deepfake fraud has introduced a new dimension of risk for businesses, eroding the foundations of digital trust. These AI-generated manipulations – capable of mimicking speech, facial expressions, and gestures with startling accuracy – are no longer confined to skilled experts. Open-source tools have democratised deepfake creation, allowing even those with minimal technical expertise to produce convincing fake videos and fake images, amplifying their threat across social media platforms. For businesses, the consequences of deepfake attacks are far-reaching, from financial fraud and data breaches to reputational damage and loss of stakeholder trust. As these threats evolve, deepfake detection tools have become indispensable. This guide examines how such tools help businesses protect operations, secure stakeholder trust, conduct comprehensive assessments, and stay resilient against emerging threats.

What are Deepfakes?

Deepfakes are artificially created or altered videos, images, or audio recordings designed to look and sound completely real. They’re generated using advanced artificial intelligence (AI) techniques, allowing fraudsters to manipulate a person’s appearance, voice, or even behavior in digital content. Unlike harmless AI-generated or lightly edited images, deepfakes can create entirely new identities and falsify real-world scenarios by replacing one person’s face or voice with another’s.

What makes deepfakes especially dangerous is their connection to synthetic identity fraud. In these schemes, fraudsters blend real and fake information – such as combining a real social security number with fabricated personal details or a fake name – to create a false identity. This synthetic profile can then be used to bypass security checks, open fraudulent accounts, or carry out financial crimes, making deepfake detection extremely challenging without specialized tools.


As many as 46% of organizations globally faced synthetic identity fraud in 2022, and that number continues to grow, costing companies exorbitant amounts of money and compromising sensitive information from unsuspecting victims.

From fraudulent financial transactions to reputational sabotage, deepfakes have evolved into a powerful tool for malicious actors. As noted by the Northwestern Buffett Institute for Global Affairs, “In a world rife with misinformation and mistrust, AI provides ever-more sophisticated means of convincing people of the veracity of false information that has the potential to lead to greater political tension, violence, or even war.” This sobering reality reveals not just the technical threat posed by deepfakes, but also their broader societal impact.

Deepfake Manipulation on Social Media Platforms

The hyper-realistic quality of deepfakes often deceives viewers and slips past traditional detection methods. As these manipulations grow more convincing, advanced technologies are necessary for identifying the subtle markers of deepfake videos and other manipulated content.

The Employment of AI and Machine Learning in Deepfake Creation

At their core, deepfakes are created using deep learning techniques, primarily Generative Adversarial Networks (GANs), a class of neural network. These systems are designed to replicate and manipulate human features, expressions, and speech with remarkable precision. To simplify, a GAN operates like an ongoing competition between two AI systems: the generator and the discriminator.

The generator acts like a highly skilled artist, using machine learning methods to create fake images, videos, or audio. It does this by analyzing massive datasets filled with examples of real-world facial features, voice patterns, and lip movements. For instance, it studies how mouths move when certain words are spoken, how skin texture appears under different lighting conditions, and how facial muscles shift during expressions. Over time, the generator learns to mimic these details with increasing accuracy.

The discriminator, on the other hand, serves as a meticulous critic. It evaluates the content created by the generator, analyzing every detail—down to subtle inconsistencies in lip movements, skin tone transitions, or eye reflections—to determine if the output is authentic or synthetic. The discriminator’s job is to flag anything that seems out of place.

The generator keeps refining its work based on the feedback it gets from the discriminator. Over time, this back-and-forth process improves the generator’s ability to produce content so convincing that even the discriminator struggles to tell that it is fake. This ongoing cycle is what makes deepfakes increasingly realistic and hard to detect without specialized tools.
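The generator-versus-discriminator loop described above can be illustrated with a deliberately tiny sketch. It is not how production deepfake tools are built: instead of images, the "real data" here is just numbers drawn around 4.0, the generator is a single linear function, and the discriminator is a single logistic unit. All names (`generator_step`, `discriminator_step`, `train`) and all settings are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator_step(w, c, real, fake, lr):
    """One gradient step on the critic's loss: -log D(real) - log(1 - D(fake))."""
    grad_w = grad_c = 0.0
    for x_real, x_fake in zip(real, fake):
        d_real = sigmoid(w * x_real + c)  # should move toward 1
        d_fake = sigmoid(w * x_fake + c)  # should move toward 0
        grad_w += -(1.0 - d_real) * x_real + d_fake * x_fake
        grad_c += -(1.0 - d_real) + d_fake
    n = len(real)
    return w - lr * grad_w / n, c - lr * grad_c / n


def generator_step(a, b, w, c, noise, lr):
    """One gradient step on the generator's loss: -log D(G(z))."""
    grad_a = grad_b = 0.0
    for z in noise:
        d_fake = sigmoid(w * (a * z + b) + c)
        grad_x = -(1.0 - d_fake) * w  # feedback passed back from the critic
        grad_a += grad_x * z
        grad_b += grad_x
    n = len(noise)
    return a - lr * grad_a / n, b - lr * grad_b / n


def train(steps=2000, batch=32, lr=0.05, seed=0):
    """Alternate critic and generator updates (toy settings, assumed)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: G(z) = a * z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        w, c = discriminator_step(w, c, real, fake, lr)
        a, b = generator_step(a, b, w, c, noise, lr)
    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train()
    print(f"generator offset b = {b:.2f} (real data is centred on 4.0)")
```

In a toy like this, the generator's offset tends to drift toward the real data as training alternates, although simple GANs can oscillate rather than settle. Real deepfake systems apply the same alternating idea to deep networks trained on images, video, or audio.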

Face Swapping Techniques and Their Implications for Deepfake Detection

One of the most recognizable uses of deepfake technology is face swapping, where a person’s face is superimposed onto another’s in video content. These manipulations can make it appear as though someone has said or done things they never actually did, making them a powerful tool for disinformation campaigns. However, the implications extend far beyond disinformation.

This new technology has also given rise to deepfake phishing, a particularly insidious form of cybercrime. According to Stu Sjouwerman, an industry expert and Forbes contributor on internet security, “Phishers are known to evolve their tactics in line with the evolution of technology. In recent years, phishing has shape shifted again, using a technology that some experts call the most dangerous form of AI-fuelled cybercrime in the world.”


This assessment is supported by a study conducted by University College London (UCL), where 31 experts ranked the most significant threats posed by AI-driven crime. The research, funded by UCL’s Dawes Centre for Future Crime, highlighted deepfakes as one of the most serious emerging risks. Among the 20 nefarious uses of AI identified, deepfakes stood out for their potential to cause widespread harm over the next 15 years.

The Accessibility of Deepfake Creation Technology and Its Risks

The proliferation of open-source deepfake creation tools has made this technology accessible to a broader audience. Even individuals with minimal technical expertise can now produce deepfakes using software such as DeepFaceLab, a system which powers over 95% of deepfake creations. This accessibility means that deepfake threats are no longer confined to highly skilled developers or well-funded cybercriminal organizations. They are now a widespread cyber threat that can emerge from virtually anyone with a computer and an internet connection.

Gartner has commented on the risks deepfakes present for businesses globally. “In the past decade, several inflection points in fields of AI have occurred that allow for the creation of synthetic images. These artificially generated images of real people’s faces, known as deepfakes, can be used by malicious actors to undermine biometric authentication or render it inefficient,” said Akif Khan, VP Analyst at Gartner. “As a result, organizations may begin to question the reliability of identity verification and authentication solutions, as they will not be able to tell whether the face of the person being verified is a live person or a deepfake.”

Viral Spread of Deepfakes: Social Media’s Role

Once created, deepfakes can spread rapidly on various social media platforms, leveraging emotionally charged or controversial narratives to gain traction. This virality exacerbates the challenge of mitigating their impact, as they can influence public perception before detection.

Financial Fraud and Deepfake-Enabled Schemes

Deepfakes are increasingly being weaponized, creating significant risks for businesses. Fraudsters have used deepfake audio and video to impersonate executives, deceiving employees into transferring funds or divulging sensitive information.

One notable case occurred in Hong Kong, where a finance worker at a multinational firm was tricked into transferring $25 million. According to Hong Kong police, the attackers used deepfake technology to convincingly impersonate the company’s chief financial officer during a video conference call.

Manipulated media can severely damage a company’s brand image. A deepfake video portraying a CEO in a compromising situation, for instance, could lead to public backlash and erode trust among stakeholders.

Challenges and Future Directions in Combating Deepfakes

Unfortunately, many subpar detection algorithms face challenges such as high false-positive rates and the evolving sophistication of deepfake creation technologies. Continuous updates and collaboration between businesses and tech developers are essential to staying ahead of these threats.
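To make the false-positive trade-off concrete, here is a minimal sketch of how a team might measure error rates for a detector at a given decision threshold. The function name `confusion_rates`, the sample labels, and the scores are all hypothetical; the scores stand in for whatever "probability of being fake" a real detection tool would output.

```python
def confusion_rates(labels, scores, threshold):
    """Return (false_positive_rate, false_negative_rate).

    labels: True means the sample really is a deepfake.
    scores: the detector's estimated probability that the sample is fake.
    A sample is flagged as fake when its score is >= threshold.
    """
    false_pos = sum(1 for y, s in zip(labels, scores) if not y and s >= threshold)
    false_neg = sum(1 for y, s in zip(labels, scores) if y and s < threshold)
    negatives = sum(1 for y in labels if not y)
    positives = len(labels) - negatives
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


# Hypothetical evaluation set: four genuine videos, then four deepfakes.
labels = [False, False, False, False, True, True, True, True]
scores = [0.1, 0.3, 0.6, 0.2, 0.9, 0.7, 0.4, 0.8]

for threshold in (0.35, 0.5, 0.65):
    fpr, fnr = confusion_rates(labels, scores, threshold)
    print(f"threshold={threshold}: FPR={fpr:.2f}, FNR={fnr:.2f}")
```

On this made-up data, raising the threshold from 0.35 to 0.65 removes the one false alarm on a genuine video but lets one deepfake through. Tuning that balance on diverse, current data is the practical work behind lowering false-positive rates.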


As noted in the study ‘Deepfake Video Detection: Challenges and Opportunities’ (Kaur et al., 2024), “Despite the significant progress in deepfake video and detection algorithms, several crucial challenges remain unsolved for present deepfake video detection methods. To detect deepfakes in real time, acquiring and analysing a large and unbiased dataset is necessary. Collecting real-time data is one of the Deep Learning (DL)-based method’s primary limitations. Unfortunately, many real-time application areas cannot access large amounts of new data.”

This shows a key issue with most deepfake detection systems: tools are only as effective as the datasets they’re trained on. Without access to diverse, up-to-date data, even advanced AI detection models struggle to keep pace with increasingly sophisticated deepfakes. Businesses must not only invest in reliable deepfake detection technologies but also support better data-sharing practices and collaboration across industries to address this gap.

Deepfake Detection Tools for Safeguarding Digital Trust

To address the challenge of manipulation from deepfakes, businesses need to adopt advanced deepfake detection tools and software. These systems rely on a combination of deep learning techniques and machine learning algorithms to meticulously analyze digital media (including images, video, and audio) for signs of generated or manipulated content.

95% of all standard customer onboarding processes fail to detect the presence of fake identification.

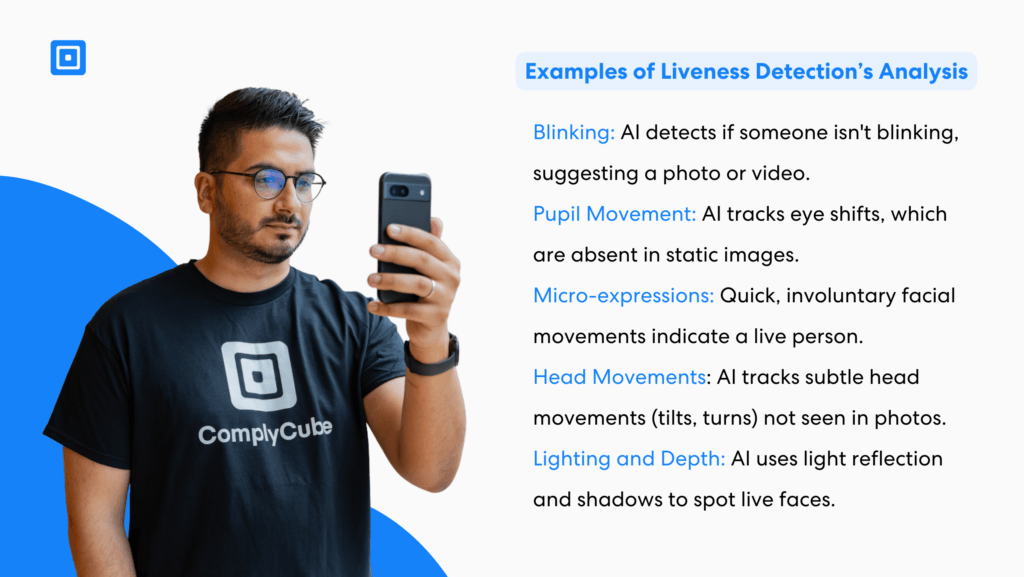

One innovative deepfake detection platform in this space is ComplyCube. The platform’s liveness detection technology sets a new benchmark in biometric verification, verifying that biometric data originates from a real, live person rather than static images, pre-recorded videos, or AI-generated deepfakes.

Powered by advanced AI and machine learning algorithms, it analyzes micro-expressions, skin texture, facial recognition patterns, and environmental interactions in real-time. Through a multi-modal approach—combining active checks, like guided head movements, with passive analysis that operates silently in the background—ComplyCube seamlessly detects sophisticated spoofing attempts, including printed photos, 3D masks, and video replays. This robust system delivers highly accurate, secure, and frictionless identity verification, empowering businesses to confidently prevent fraud.

Building Resilience Against Deepfake Threats with Deepfake Detection Tools

Deepfakes represent a formidable challenge to digital trust, particularly for businesses striving to maintain secure and reliable operations. By adopting advanced deepfake detection tools and integrating them into comprehensive cybersecurity frameworks, organizations can mitigate the risks posed by manipulated media.

ComplyCube is a powerful platform designed to address the growing deepfake detection challenge by leveraging advanced machine learning techniques and deep learning technologies. By combining face recognition, document analysis, and anti-spoofing measures, the platform ensures secure and reliable identity verification, even in an era where detecting deepfake videos is becoming increasingly difficult.

Robust Face Recognition and Similarity Analysis: ComplyCube utilizes biometric liveness detection certified to ISO/IEC 30107-3 PAD Level 2 to ensure accurate face recognition. By comparing real videos and biometric data samples, the tool effectively distinguishes genuine users from manipulated identities. Its deepfake detection capabilities are enhanced by analyzing facial features, behavioral patterns, and other unique biological signals, creating an additional layer of verification assurance.

Advanced Document Verification: Using a blend of advanced machine learning techniques and human expertise, ComplyCube analyzes a wide range of ID documents—such as passports, driver’s licenses, national ID cards, residence permits, and visa stamps. This verification process involves cross-referencing documents with trusted data sets to identify signs of tampering, forgery, or blacklisting, ensuring they are authentic and unaltered.

Cutting-Edge Liveness Detection Technology: With AI-powered PAD Level 2 liveness detection, ComplyCube can detect deepfake videos and prevent impersonation attempts. This system uses anti-spoofing algorithms to identify subtle discrepancies between real videos and manipulated media, safeguarding against increasingly sophisticated deepfakes.

Seamless Biometric Onboarding: ComplyCube offers a guided face capture experience, streamlining the biometric onboarding process for industries such as finance, telecommunications, travel, and enterprise services. By focusing on high-quality data set integration and continuous system optimization, the tool delivers a smooth and accurate authentication process.

In the face of rising threats from AI-generated fake content, a partner like ComplyCube plays an essential role in strengthening digital trust. Its comprehensive approach to face recognition, document verification, and deepfake detection helps organizations stay ahead of challenges while ensuring the integrity of their identity verification processes.

Contact one of ComplyCube’s compliance experts today for more information on how to safeguard your organisation.