TL;DR: Deepfakes have evolved from AI novelties into serious threats to global stability, enabling fraud and misinformation at scale. Their impact on elections, public trust, and business security is growing rapidly, demanding urgent attention. This guide explores how deepfake detection software and Identity Verification (IDV) solutions, such as biometric verification and document verification, can help mitigate risks and protect digital integrity.

What is a Deepfake?

Deepfakes get their name from an Artificial Intelligence (AI) technique known as deep learning. Deep learning algorithms can teach themselves to solve extremely complex problems. By analyzing vast datasets, they learn to generate hyper-realistic content, seamlessly swapping faces and voices into images, video, and audio.

The world is quickly heading into a deepfake crisis, and concerns continue to grow over how this AI technology will affect organizations. It is already reshaping the global socio-economic and political landscape. Most recently, fears about election integrity have intensified and attracted significant scrutiny.

What is Deepfake Detection?

Deepfake detection software identifies images, sounds, or videos that have been artificially created to look hyper-realistic. Today, companies integrate IDV solutions such as document verification and biometric verification processes to detect patterns that would not exist in ‘real content’.

AI-powered identity verification is quickly becoming the only way to reliably counter deepfake technology and mitigate this type of identity fraud. Compared with a human reviewer, these technologies identify spoofed content far more effectively: they process more data in a given period of time, more accurately, and more cost-effectively.

In September 2023, three US federal agencies, the National Security Agency (NSA), the Federal Bureau of Investigation (FBI), and the Cybersecurity and Infrastructure Security Agency (CISA), released a paper declaring that deepfake threats had increased exponentially and that automated preventative technologies such as deepfake detection software were essential.

Threats from synthetic media, such as deepfakes, have exponentially increased.

As deepfake threats grow across sectors, the need for identity verification becomes more urgent. IDV solutions that combine document verification, biometric checks, and liveness detection now represent a critical defense against AI-driven fraud.
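To make the idea of combining checks concrete, here is a minimal sketch of how the three results might be merged into a single onboarding decision. The `CheckResult` structure, the `decide` function, the score scale, and the `0.8` review threshold are all hypothetical and chosen for illustration; they do not describe any particular vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    """Outcome of one verification step (hypothetical structure)."""
    name: str
    passed: bool
    score: float  # 0.0 (likely fraudulent) to 1.0 (likely genuine)


def decide(results: list[CheckResult], review_below: float = 0.8) -> str:
    """Combine independent checks into one onboarding decision.

    Any failed check rejects the applicant. A pass with a low score
    is routed to manual review instead of being approved outright.
    """
    if any(not r.passed for r in results):
        return "reject"
    if any(r.score < review_below for r in results):
        return "manual_review"
    return "approve"


# Illustrative values only; real scores would come from the IDV provider.
checks = [
    CheckResult("document_verification", passed=True, score=0.96),
    CheckResult("biometric_match", passed=True, score=0.91),
    CheckResult("liveness_detection", passed=True, score=0.72),
]
print(decide(checks))  # manual_review
```

Treating the checks as independent gates means a convincing synthetic face cannot compensate for a forged document, and a borderline liveness score still gets a second look.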

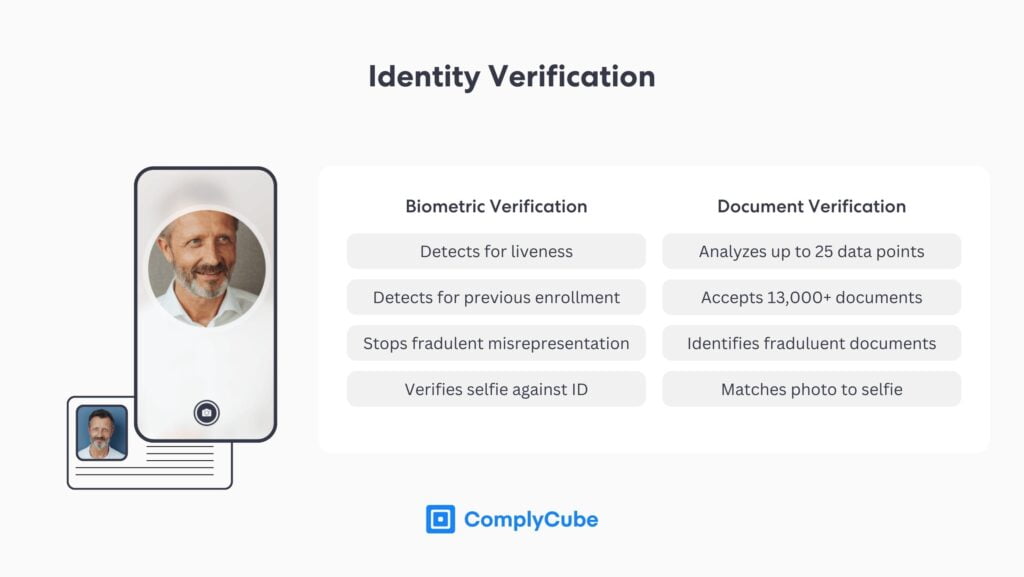

Document Verification

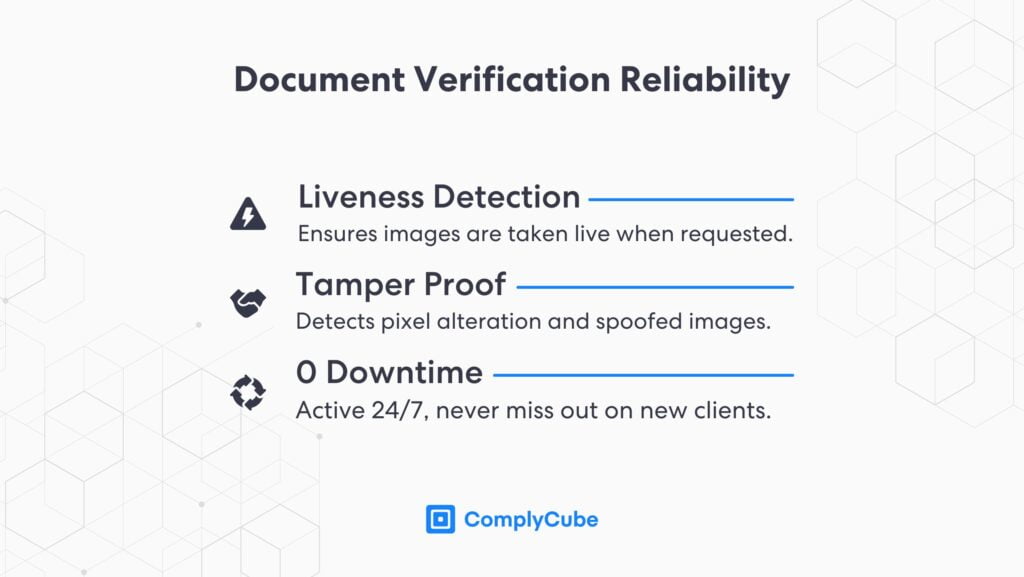

Document verification is one of the two key steps in Anti-Money Laundering (AML), Know Your Customer (KYC), and IDV processes. It uses an AI-powered verification engine to read KYC documents, such as a driver’s license, in under 15 seconds. In fact, many deepfake fraud attempts are first detected through anomalies found during document verification, making it a critical component of any deepfake detection software.

By simultaneously verifying document authenticity and extracting the available data, document verification provides a strong level of identity assurance. It also acts as a preliminary layer of deepfake detection, identifying artificially created images of ID cards. For more information on Gen AI, document verification, and deepfake detection methods, read Generative AI Fraud and Identity Verification.

Biometric Verification

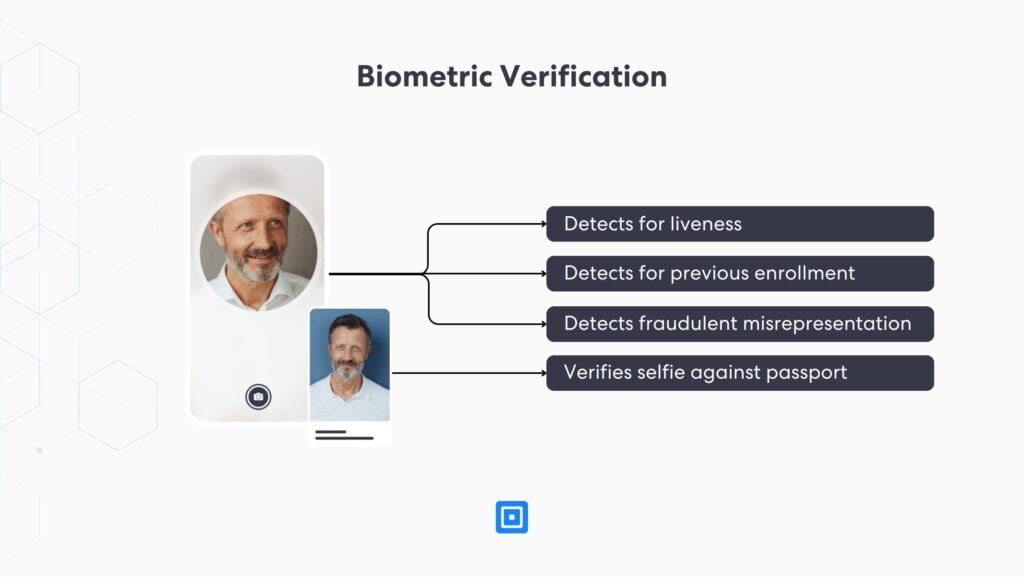

Biometric verification uses powerful biometric identification and facial recognition technologies to scan, verify, and authenticate user biometrics. Analyzing biometric information, such as facial features, micro-expressions, skin tones, and texture (sometimes using alternative data such as iris scans), this process acts as a liveness detection tool and prevents presentation attacks, such as deepfakes.

Biometric authentication remains one of the most reliable ways to verify identity. While biometric data is extremely difficult to forge, even with AI, detecting deepfakes is still far from simple.

Automated IDV solutions play a critical role in modern identity verification, client onboarding, and authentication. They enable organisations to handle large volumes of checks accurately and at scale. For more information, read The Advantages of Biometric Verification.

Deepfakes and Election Integrity

Malicious actors often use deepfakes to undermine elections. They can spread false information and manipulate public opinion. The Alan Turing Institute found that nearly nine out of ten people worry about deepfakes influencing election outcomes.

This concern reflects real risks. Bad actors have already released high-profile deepfakes of political figures, including fabricated audio and video clips intended to confuse voters.

Ahead of the 2024 UK General Election, unknown actors created deepfakes mimicking the voices of Prime Minister Rishi Sunak, Labour leader Keir Starmer, and London Mayor Sadiq Khan. They distributed these clips across social media, reaching hundreds of thousands of potential voters and fueling public misconceptions.

These manipulations included fake corruption scandals and misleading claims about political positions and intentions. As a result, such content can cause serious harm, particularly when voters struggle to tell real from fake.

Deepfake Detection and Response

Deepfake detection is becoming increasingly difficult, even for tech giants like Meta, Google, and Microsoft, all of which have pledged to combat deceptive AI in elections. The challenge stems from the growing sophistication of generative AI tools, which now produce content that is nearly indistinguishable from reality. Companies must integrate IDV solutions and real-time detection APIs to stop threats before they go live.

For instance, Meta’s President of Global Affairs, Nick Clegg, has noted the challenges in identifying AI-generated content, emphasizing that malicious actors can strip away invisible markers that usually indicate manipulation.

The threat of deepfakes is global. It even appears to follow international events. In America, deepfakes mimicking President Joe Biden’s voice were used in a robocall to share false information about the election. This incident highlights the potential of deepfakes to suppress voter turnout.

This challenge goes beyond detection. Once a deepfake spreads, it often causes harm before fact-checkers can debunk it. Platforms must act before fraudulent content goes live. Social networks such as X (formerly Twitter), Facebook, and Instagram should adopt deepfake detection software with pre-upload scanning capabilities and real-time liveness detection via SDKs and APIs. For more information, read Integrating with a Liveness Detection SDK.
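As a rough illustration of pre-upload scanning, the sketch below holds each upload until a detector has scored it. Everything here is an assumption made for the example: `pre_upload_gate`, the `Detector` type, the thresholds, and `stub_detector` are hypothetical names, and the stub simply stands in for whatever real detection model or API a platform would call.

```python
from typing import Callable

# A detector is any callable returning the estimated probability (0.0 to 1.0)
# that the media is synthetic. The real model or API behind it is out of scope.
Detector = Callable[[bytes], float]


def pre_upload_gate(media: bytes, detector: Detector,
                    block_at: float = 0.9, review_at: float = 0.5) -> str:
    """Decide what happens to an upload before it becomes publicly visible."""
    score = detector(media)
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "hold_for_review"
    return "publish"


def stub_detector(media: bytes) -> float:
    """Placeholder standing in for a real detection service (hypothetical)."""
    return 0.95 if media.startswith(b"FAKE") else 0.1


print(pre_upload_gate(b"FAKE-robocall-audio", stub_detector))  # block
print(pre_upload_gate(b"genuine-clip", stub_detector))         # publish
```

The middle "hold for review" band reflects the point made above: because detection is imperfect, uncertain content is better delayed than published and debunked later.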

Deepfake Detection in Identity Verification

Beyond social media, deepfakes have become a major fraud vector across industries. Today, they represent a key fraud risk across financial services, from banking and trading apps to crypto platforms and payment services. Criminals use deepfakes daily to bypass IDV, drain funds, and open accounts with credit providers.

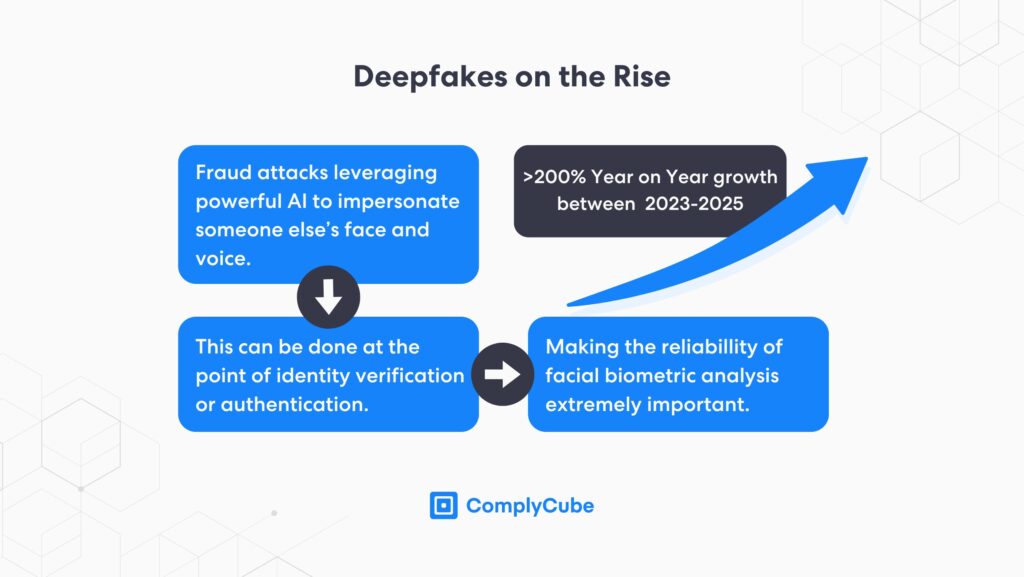

Generative AI poses the biggest threat to the [financial] industry, potentially enabling fraud losses to reach $40bn in the US by 2027, up from $12.3bn in 2023.

This prediction suggests that deepfake fraud could rise by over 200% within four years, emerging as a leading driver of global financial crime. To prevent this surge, organisations must invest in advanced deepfake detection software capable of identifying synthetic content before it leads to financial loss.
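The "over 200%" figure can be checked directly from the two numbers in the quote above. The short calculation below also derives an implied annual growth rate; that second figure is not stated in the source and assumes smooth compounding over the four years from 2023 to 2027.

```python
losses_2023_bn = 12.3  # US fraud losses in 2023, $bn (figure quoted above)
losses_2027_bn = 40.0  # projected US fraud losses in 2027, $bn (figure quoted above)
years = 2027 - 2023

# Total percentage increase between the two quoted figures.
pct_increase = (losses_2027_bn - losses_2023_bn) / losses_2023_bn * 100

# Implied compound annual growth rate (an assumption: smooth compounding).
implied_annual_growth = (losses_2027_bn / losses_2023_bn) ** (1 / years) - 1

print(f"Total increase: {pct_increase:.1f}%")
print(f"Implied annual growth: {implied_annual_growth:.1%}")
```

The total increase works out to roughly 225%, which is consistent with the "over 200%" claim.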

The Erosion of Trust with Fraudulent Technology

Beyond elections, the proliferation of deepfakes poses a broader threat to public and private trust in information and identity verification. As deepfake technology becomes more prevalent, people are becoming increasingly skeptical of the media they consume. This skepticism could lead to a phenomenon known as the liar’s dividend, where the mere possibility of fake content gives individuals plausible deniability, undermining accountability and truth. IDV solutions could help organisations distinguish truth from falsehood, restoring digital trust in an age of AI deception.

A common example of this phenomenon is damaging media coverage of a public figure, political leader, or business leader. Skepticism towards authentic media allows these individuals to exploit public sentiment and claim that genuine footage is fake. It is easy to see how this effect might snowball.

Case Study: Arup CEO Deepfake Scam and $25 Million Fraud

In early 2024, cybercriminals used generative AI to impersonate senior Arup executives, including the CEO, during a fake video call with a finance employee in the company’s Hong Kong office. Believing the request was legitimate, the employee transferred US $25 million to accounts controlled by the fraudsters.

The deepfake video closely mirrored Arup’s internal communication style and featured multiple synthetic “executives”. Notably, the attackers had studied the firm’s hierarchy and workflows, combining deepfake technology with targeted social engineering. As a result, the employee found no reason to question the interaction. By the time identity verification checks uncovered the fraud, the funds were unrecoverable.

This case underscores how easily deepfakes can bypass traditional verification methods. Organisations must therefore adopt IDV solutions that combine real-time biometric verification with deepfake detection software to counter such threats. Ultimately, the Arup incident serves as a clear warning that even global, well-resourced firms remain vulnerable to deepfake-enabled fraud.

Building Trust at Scale with Deepfake Detection Software

While the dangers of deepfakes are well understood, regulatory responses remain fragmented. In the US, nationwide legislation is still lacking and a cohesive federal strategy has yet to materialise, although at least 20 states have enacted laws targeting election-related deepfakes. Meanwhile, the Department of the Treasury has endorsed automated technologies such as biometric verification and document verification as some of the most effective tools for preventing and combating emerging fraud techniques.

The UK has also seen limited progress. While laws prohibit the creation and distribution of harmful personal deepfakes, broader regulations targeting their use in electoral manipulation have yet to be enacted.

For now, it remains the responsibility of each business to implement IDV solutions that include deepfake detection software as a frontline tool to counter deepfake-enabled fraud. Additionally, organisations must account for deepfake threats within their Risk-Based Approach (RBA) when shaping an Anti-Money Laundering (AML) strategy.
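One way to picture a Risk-Based Approach is as a mapping from customer risk tier to the checks that must be completed. The sketch below is purely illustrative: the tier names, the check names, and the `checks_for` helper are hypothetical, and the right set of checks for each tier depends on a firm's own risk assessment and regulatory obligations.

```python
# Hypothetical mapping from customer risk tier to required checks.
# The tiers and check lists are illustrative, not regulatory guidance.
REQUIRED_CHECKS = {
    "low": ["document_verification"],
    "medium": ["document_verification", "biometric_verification"],
    "high": [
        "document_verification",
        "biometric_verification",
        "liveness_detection",
        "manual_review",
    ],
}


def checks_for(risk_tier: str) -> list[str]:
    """Return the checks for a tier, defaulting to the strictest set."""
    return REQUIRED_CHECKS.get(risk_tier, REQUIRED_CHECKS["high"])


print(checks_for("medium"))
print(checks_for("unknown"))  # unrecognised tiers fall back to the "high" list
```

Defaulting unknown tiers to the strictest set is a deliberate fail-safe choice: a misclassified customer gets more scrutiny rather than less.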

Key Takeaways

- Deepfakes are fueling a surge in identity fraud, bypassing standard IDV solutions.

- Election integrity is increasingly at risk, as synthetic media is used to spread disinformation and manipulate public perception.

- Biometric verification plays a crucial role in combating deepfakes, using facial recognition, liveness detection, and micro-expression analysis.

- AI-powered document verification is essential for detecting forged or synthetic IDs, enabling businesses to verify document authenticity.

- ComplyCube provides a unified platform that integrates identity verification, document verification and deepfake detection software for complete fraud protection.

About ComplyCube

To counter AI-driven fraud, deepfake detection software and IDV solutions have become essential. These tools use generative AI to analyze the same data patterns used to create deepfakes, helping to detect synthetic content and prevent fraud. ComplyCube’s document and biometric verification solutions play a key role in this process, enabling organisations worldwide to authenticate users and block deepfake attempts.

The leading provider of IDV solutions was built to combat the growing threats of innovative fraudulent methodologies in the 21st century. By leveraging advanced AI and machine learning algorithms, ComplyCube’s platform offers comprehensive IDV and biometric verification services, ensuring robust protection against identity fraud and deepfake content.

Boasting a flexible and customizable solution, their services can be tailored according to a firm’s RBA to help businesses enhance their security protocols, maintain compliance with regulatory standards, and build trust with their customers in an increasingly digital world. For more information, reach out to a compliance specialist today.

Frequently Asked Questions

What is a deepfake and how does it work?

A deepfake is a form of synthetic media, such as video, image or audio, created using deep learning algorithms. These AI systems train on large datasets to replicate subtle human traits, including facial expressions and speech patterns. As a result, deepfakes can convincingly mimic real people’s voices, faces, and behaviors. Threat actors frequently use them to spread misinformation, impersonate individuals or commit identity fraud.

How can you tell if a video, audio or image is a deepfake?

Deepfakes can be identified using AI-powered detection tools that analyse content for inconsistencies. Deepfake detection software looks for anomalies in facial symmetry, blinking patterns, lighting, or background distortions. Advanced systems may also scan metadata and detect manipulation artifacts invisible to the human eye. While manual detection is unreliable, automated solutions can flag synthetic content with high levels of accuracy.

What is biometric verification and how does it stop deepfakes?

Biometric verification is a type of identity verification that authenticates a person’s identity by analysing unique biological traits, most commonly facial features captured during a live interaction. It uses liveness detection to ensure the presence of a real human. This prevents spoofing attempts using static images, videos, or AI-generated faces. Since synthetic media struggles to pass these real-time checks, biometric verification remains one of the most effective tools for blocking deepfakes.

How do deepfakes affect elections and democracy?

Deepfakes are increasingly used to spread false political narratives, impersonate candidates, and confuse voters. This erodes voter trust, undermines electoral legitimacy, and damages the foundations of democratic institutions. Election interference through deepfakes is now a recognised security concern in multiple countries.

What makes ComplyCube effective as a deepfake detection software?

ComplyCube combines AI-powered document verification, biometric authentication, and real-time identity verification to detect deepfake-based fraud. Its liveness detection technology flags synthetic media and stops fraudulent identities before onboarding. Moreover, ComplyCube analyses both documents and facial data for signs of manipulation. Trusted by global enterprises, ComplyCube ensures deepfake threats are mitigated without compromising user experience or compliance.