Our digital identities have become as crucial as our physical ones, and inequality exists in the digital identity space as much as it does in any other part of human life. Gender discrimination shows up both in the lower rate of ID ownership among women compared with men worldwide and in AI biases caused by unequal training data. Women, particularly women of colour, are being left behind in the digital identity revolution, with Identity Verification (IDV) technologies showing far higher error rates for women than for men. This isn't just a tech issue; it's a human rights crisis with far-reaching consequences.

The Global ID Gap

Imagine being unable to open a bank account, vote, or access healthcare simply because you lack a piece of plastic or a digital code. For a staggering 1 in 4 women worldwide, this is the reality. They lack a valid ID, which effectively renders them invisible to many systems and services we take for granted. Without the necessary documentation, these women face many disadvantages, including difficulty accessing healthcare, education, employment and legal protections.

“Without an ID, women are often trapped in a cycle of poverty and dependency,” says Dr. Amina Sayed, a researcher at the World Bank. “It’s not just about a card—it’s about freedom, opportunity, and dignity.”

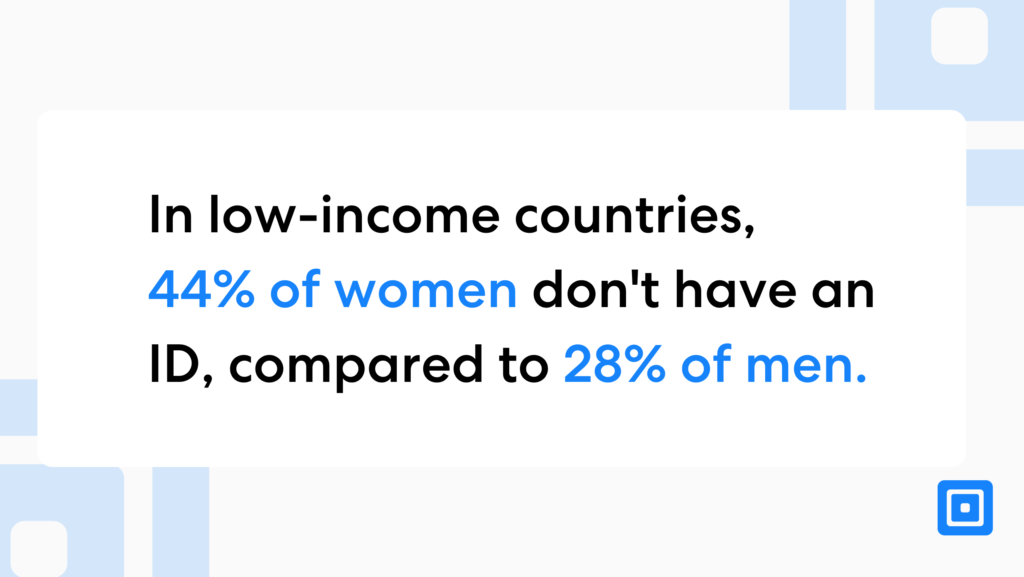

But here’s where it gets even more alarming: the World Bank Group found that in low-income countries, 44% of women don’t have an ID, compared to 28% of men. That’s nearly half of all women in these nations, cut off from full participation in society and the economy.

In 37 countries, married women face more obstacles than married men when applying for passports. In several nations, women also face extra legal challenges that limit their access to digital financial services. For example, married women may be required to present a marriage certificate, adopt their husband’s name, or seek approval from a male family member to obtain identification.

The World Bank’s 2024 Women, Business and the Law report highlights that in 14 countries, women face restrictions that prevent them from traveling outside the home freely. Additionally, women may encounter resistance or lack of support from family members, which can hinder their access to digital identification.

When AI Plays Favourites: The Bias in the Machine

Artificial Intelligence is often thought of as an equaliser, because it can remove human bias from decision-making processes. In practice, however, the data used to train AI facial recognition systems is unequal, which leads to higher error rates for women and people of colour.

While these systems misidentify light-skinned men only 0.8% of the time, the error rate skyrockets to 34.7% for darker-skinned women, more than 40 times higher. That's not a small gap; it's a clear reflection of the inequality present in societies around the world.

"It's like these systems were designed with blinders on," notes AI ethicist Dr. Joy Buolamwini. "They simply don't 'see' a large portion of the world's population accurately." This bias shows that women are at a disadvantage even in Western societies, where facial biometrics still have a higher error rate for women than for men.

Women of Colour Highly Disadvantaged

For women of colour, these issues create a perfect storm of exclusion. They're more likely to lack traditional IDs, and more likely to be misidentified by AI systems when they do interact with digital ID platforms. A 2019 test by the US federal government concluded that the technology works best on middle-aged white men, while accuracy was noticeably lower for people of colour, women, children and elderly individuals.

"We're seeing 21st-century technology amplify 20th-century biases," warns civil rights attorney Maya Johnson. "It's digital redlining, pure and simple." Inequality in facial verification technology arises because these systems are trained on datasets containing more male faces, particularly white male faces, which leads to better accuracy for these groups. This happens because many early datasets were created by predominantly male researchers or sourced from data with limited diversity. As a result, women, especially women of colour, are underrepresented, so the system performs poorly for them, leading to misidentification or false negatives.

Bridging the Gap: A Call to Action

So, what can be done? Experts agree that a multi-pronged approach is needed:

- Social Initiatives: Programs to help women obtain traditional IDs, especially in rural and low-income areas.

- Legal Reform: Challenging discriminatory laws that make it harder for women to obtain IDs.

- AI Overhaul: Diversifying AI development teams and training data to create more inclusive systems.

- Accountability: Implementing strict oversight and testing of AI systems for bias.
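To make the accountability point more concrete, here is a minimal sketch of one kind of bias test: measuring the error rate separately for each demographic group and comparing the worst group with the best. It assumes verification outcomes have already been labelled by group; the function names `group_error_rates` and `disparity_ratio` and the toy data are hypothetical and illustrative only, not ComplyCube's method or any library's API.

```python
from collections import defaultdict


def group_error_rates(results):
    """Return the error rate for each demographic group.

    `results` is an iterable of (group, correct) pairs, where `correct`
    is True if the system's decision was right for that attempt.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, correct in results:
        totals[group] += 1
        if not correct:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the worst group's error rate to the best group's.

    A value near 1.0 means roughly uniform performance across groups.
    """
    best = min(rates.values())
    worst = max(rates.values())
    if best == 0:
        # Avoid dividing by zero: any error in another group is unbounded disparity.
        return float("inf") if worst > 0 else 1.0
    return worst / best


if __name__ == "__main__":
    # Toy data only: 1,000 synthetic attempts per group, with error counts
    # chosen to mirror the 0.8% and 34.7% rates quoted in this article.
    toy_results = (
        [("lighter-skinned men", False)] * 8
        + [("lighter-skinned men", True)] * 992
        + [("darker-skinned women", False)] * 347
        + [("darker-skinned women", True)] * 653
    )
    rates = group_error_rates(toy_results)
    for group, rate in rates.items():
        print(f"{group}: {rate:.1%}")
    print(f"disparity ratio: {disparity_ratio(rates):.1f}x")
```

A real audit would use far larger, representative samples and would typically report false matches and false non-matches separately, but even this simple comparison makes a gap like the one described above impossible to overlook.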

“This is a solvable problem,” insists tech entrepreneur Aisha Kahn. “But it requires acknowledging the issue and committing resources to fix it. We can’t afford to leave half the world’s population behind in the digital age.”

As our future becomes increasingly digital, it's important that every platform offering facial recognition technology does its part to ensure the use of ethical, unbiased AI. At ComplyCube, ethical AI considerations have been central to our development process. We've implemented AI/ML systems with built-in bias-sensitive drift detection so that our models maintain fairness across diverse demographic groups. Our independent validation processes ensure near-uniform performance across all groups, and we continue to refine our systems to reduce bias and improve accuracy.

For more information on partnering with an IDV provider focused on ethical AI, get in touch with one of our compliance experts.