Global politics hinge on shifting narratives that millions of people rewrite each day, as our opinions are reshaped by the last thing we read on Twitter. When what we’re reading reflects the views of real people from all walks of life, we are reaping the benefits of freedom of speech, a clearly democratic use of technology. However, when what we’re reading is the product of fraudulent bots and missing identity checks on social media, it’s anything but democratic; it’s an attempt to control a space that should be ours. The lack of verification practices across online platforms has enabled bad actors to steer global discussions, often spreading false information to advance a specific political agenda. Detecting fake social media accounts has therefore become critical.

Fake accounts and bots have long been tied to international politics and have caused worldwide controversy over the past decade. Hundreds, if not thousands, of news articles have reported on increased bot activity on social platforms ahead of elections. Ahead of the 2024 UK elections, for example, Global Witness’s piece, “Investigation reveals content posted by bot-like accounts on X has been seen 150 million times ahead of the UK elections”, highlighted that opinions held by the general public would not be the sole deciding factor.

Media outlets are not the only ones to have spoken out about bot-driven political traffic; academics have been studying the problem for the past 20 years. One critical early piece in the discussion of malicious bot software was T. Holz’s 2005 article, “A Short Visit to the Bot Zoo.” It was one of the first to address bot attacks online, which at the time were used primarily for mass identity theft, distributed denial-of-service (DDoS) attacks, and spam.

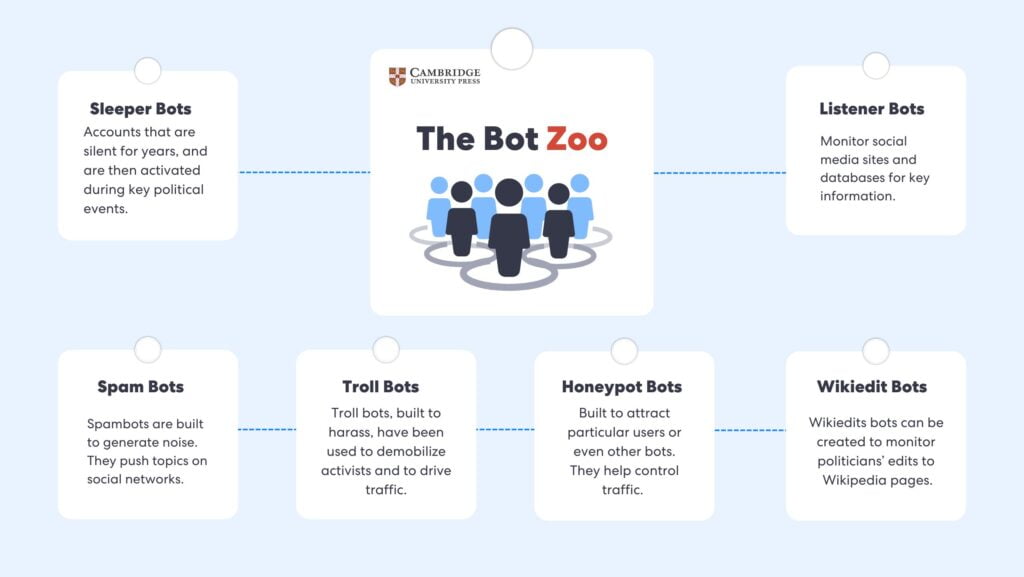

Holz captured the key characteristics of these bots, outlining their traits and behaviors, and created a structure for categorizing different kinds of bots. In 2020, Cambridge academics built on Holz’s work, along with many other leading papers and studies on bot behavior published since 2005, to create their own classification focused specifically on the online activity of political bots.

A Zoo of Political Bots

Academics published by Cambridge University Press have written extensively on the use and threats of political bots. Samuel C. Woolley made real strides in his 2020 paper, “Bots and Computational Propaganda: Automation for Communication and Control.” He states in this paper that “The presence [of] social bots in online political discussion can create three tangible issues: first, influence can be redistributed across suspicious accounts that may be operated with malicious purposes; second, the political conversation can become further polarized; third, the spreading of misinformation and unverified information can be enhanced.”

He goes on to replicate Holz’s original Bot-Zoo classification but applies the concept to political bots, identifying the following categories:

- Listener Bots: Can monitor social media sites and databases for key information but also track and communicate what they find.

- Spambots: Spambots, conversely, are built to generate noise.

- Wikiedit bots: Wikiedit bots can be created to monitor politicians’ edits to Wikipedia pages.

- Sleeper bots: Sleeper bots are social media accounts that sit on a site like Twitter, all but unused for years, to generate a more realistic online presence. They are then activated during key political events.

- Troll bots: Troll bots, built to harass, have been used to troll activists trying to organize and communicate on Twitter but can also drive traffic from one cause, product, or idea to another.

- Honeypot bots: Built to attract particular users or even other bots.
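
The sleeper-bot pattern described in the list above (a long dormant stretch followed by a sudden burst of activity) can be illustrated with a small Python sketch. This is not a method taken from Woolley’s paper or from any platform’s detection system; the function name `looks_like_sleeper` and every threshold are hypothetical and chosen purely for illustration.

```python
from datetime import datetime, timedelta


def looks_like_sleeper(post_times, now, dormant_days=365,
                       burst_window_days=14, burst_posts=50):
    """Flag an account that was silent for a long stretch, then posted heavily.

    All thresholds are illustrative assumptions, not values from any study.
    """
    window_start = now - timedelta(days=burst_window_days)
    recent = sorted(t for t in post_times if window_start <= t <= now)
    if len(recent) < burst_posts:
        return False  # no recent burst of activity

    earlier = [t for t in post_times if t < window_start]
    if not earlier:
        return False  # brand-new account: not treated as a sleeper here

    # Dormancy: gap between the last post before the burst window
    # and the first post inside it.
    gap = recent[0] - max(earlier)
    return gap >= timedelta(days=dormant_days)
```

A real detector would need far more signals (content, network structure, timing patterns); a single dormancy heuristic like this would produce many false positives on its own.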

Modern-Day Politics

Modern politics have become increasingly intertwined with social media platforms, where the battle for public opinion is often influenced by malicious actors rather than genuine discourse. The proliferation of bots and fake accounts has shifted the landscape of political engagement, allowing for misinformation to spread at unprecedented speeds.

The Chinese Spy Balloon Incident (2023)

One example is a case from early 2023, in which armies of bots battled on social media over the Chinese spy balloon incident. Researchers from Carnegie Mellon University examined 1.2 million tweets related to the incident and found some striking results.

The tweets from China were mainly artificial, with 64% written by bots. Bots were also used on the US side to try to shift the blame, with 35% of US tweets coming from bots. This case highlights that much of the content we encounter daily on platforms like Twitter or Facebook may not be trustworthy, as it’s becoming harder to distinguish between real and fabricated information.

Brexit Referendum (2016)

Dr. Marco Bastos uncovered a network of social media bots that were used to saturate Twitter with electoral messages during the 2016 Brexit referendum campaign. The research found that 13,493 accounts tweeted within the two weeks before and after the referendum.

Unsurprisingly, these profiles disappeared after the voting was over. City University’s research on this case states that “these false online identities voiced opinions and attempted to manipulate public opinion through rapid cascade tweets.”

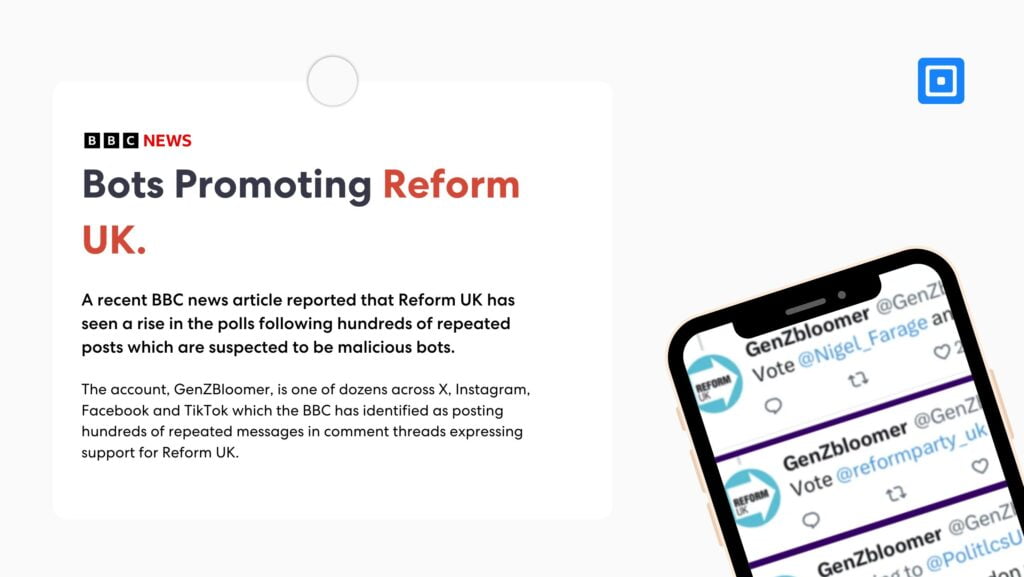

Bots Promoting Reform UK (2024)

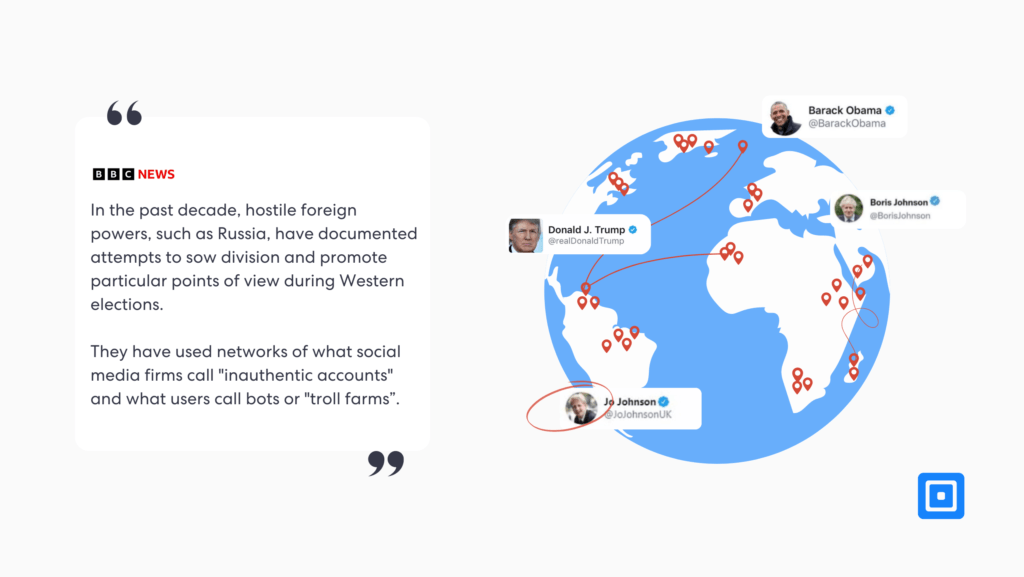

A recent BBC news article reported that Reform UK has seen a rise in the polls following repeated posts that are suspected to have been engineered by malicious bots. The BBC decided to look into this further, getting in touch with the people behind accounts clearly trying to exaggerate Reform UK’s popularity. Following concerns about foreign interference in other elections, the investigation concluded that many of these accounts were inauthentic.

The BBC article states, “In the past decade, hostile foreign powers, such as Russia, have documented attempts to sow division and promote particular points of view during Western elections. They have used networks of what social media firms call ‘inauthentic accounts’ and what users call bots or ‘troll farms.’”

It’s difficult to measure the extent of influence achieved by these forms of manipulation, yet many journalists and academics have concluded that it is meaningful. Academics published by Cambridge University Press report that “researchers have cataloged political bot use in massively bolstering the social media metrics of politicians and political candidates from Donald Trump to Rodrigo Duterte.”

Getting the Situation Under Control

The question is whether online platforms are doing enough to curb the spread of malicious bot-driven content. Election periods in particular seem to trigger an increase in such activity, which points to a lack of robust identity verification systems.

Many social media platforms try to address the problem by removing fake accounts. In the last quarter of 2023, Facebook removed nearly 700 million fake accounts, after removing 827 million in the previous quarter. Yet no matter how many suspicious accounts are removed, more reappear. This suggests that the problem lies with the sign-up process.

More stringent measures, such as identity verification, may help drastically reduce the number of these fraudulent accounts. Ultimately, ensuring the integrity of political discussions online will require a collaborative effort between governments, tech companies, and users themselves.

Next Steps for Safer Platforms

Identity Verification (IDV) software offers a way to fight bot-driven traffic online by enforcing strict user authentication and identity verification protocols. Platforms that adopt IDV can implement several key measures:

- Document Checks: These checks verify that the presented identity is authentic and valid by examining official documents such as passports to confirm their legitimacy.

- Biometric Verification: Biometric checks go a step further by comparing the user’s face with the photo on the document to confirm that the person presenting it is the rightful owner. Liveness detection technology, which can analyze cues such as subtle facial micro-expressions, also verifies that the user is physically present and not a bot or recording.

- Age Verification: Safeguarding minors is crucial for creating safer online environments. Age verification helps ensure that users meet age requirements, reducing the risk of exploitation or exposure to harmful content.

By integrating these techniques, IDV platforms can significantly reduce bot activity and safeguard social media spaces from misuse. These measures help ensure that only real users create accounts, curbing the spread of spam, misinformation, and other malicious activities.
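
As a rough illustration of how these checks might combine at sign-up, the Python sketch below gates account creation on all of them passing. It does not represent any specific IDV product’s API: the `SignupChecks` fields, the `allow_signup` function, and the default minimum age of 13 are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class SignupChecks:
    """Results a hypothetical IDV provider might return for one sign-up."""
    document_valid: bool          # document check passed
    face_matches_document: bool   # biometric match against the document photo
    liveness_passed: bool         # user is physically present, not a recording
    age_years: int                # age derived from the verified document


def allow_signup(checks, minimum_age=13):
    """Return (allowed, reasons); allowed only if every check passes.

    minimum_age is an illustrative default, not a legal requirement.
    """
    reasons = []
    if not checks.document_valid:
        reasons.append("document check failed")
    if not checks.face_matches_document:
        reasons.append("biometric match failed")
    if not checks.liveness_passed:
        reasons.append("liveness check failed")
    if checks.age_years < minimum_age:
        reasons.append("below minimum age")
    return (not reasons, reasons)
```

Returning the list of failure reasons, rather than a bare yes or no, would let a platform tell a genuine user which step to retry while still blocking automated sign-ups.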

For more information on how to safeguard your online platform from bot-driven fraud, contact our expert compliance team.