The Online Safety Bill (OSB) and the UK Online Safety Act (OSA) are two sides of the same coin. The former laid the legislative groundwork for the latter’s online identity verification and age assurance policies. This guide informs readers of the Act’s significance and why a sophisticated age verification system must be established to safeguard the online world.

What is the UK Online Safety Bill?

The UK Online Safety Bill was the draft legislation whose passage through Parliament opened the discussions on the Act’s contents. These discussions were to determine how harmful content should be regulated online, including content pertaining to minors and explicit material, and to establish adequate safeguards to protect all internet users, particularly the young.

The Bill’s discussions also established regulatory expectations and the key regulating bodies that would enforce the decisions. The OSB opened talks around implementing age verification methods as a barrier to age-gated content and user empowerment tools where owners of online accounts could set their own safeguards.

What is the UK Online Safety Act?

The UK OSA finalized these discussions into enforceable law. The Act details the responsibilities of the regulatory bodies and of the institutions that must adhere to their policies, with Ofcom acting as the lead regulator for online services in the UK.

What are the Key Principles of the Online Safety Act?

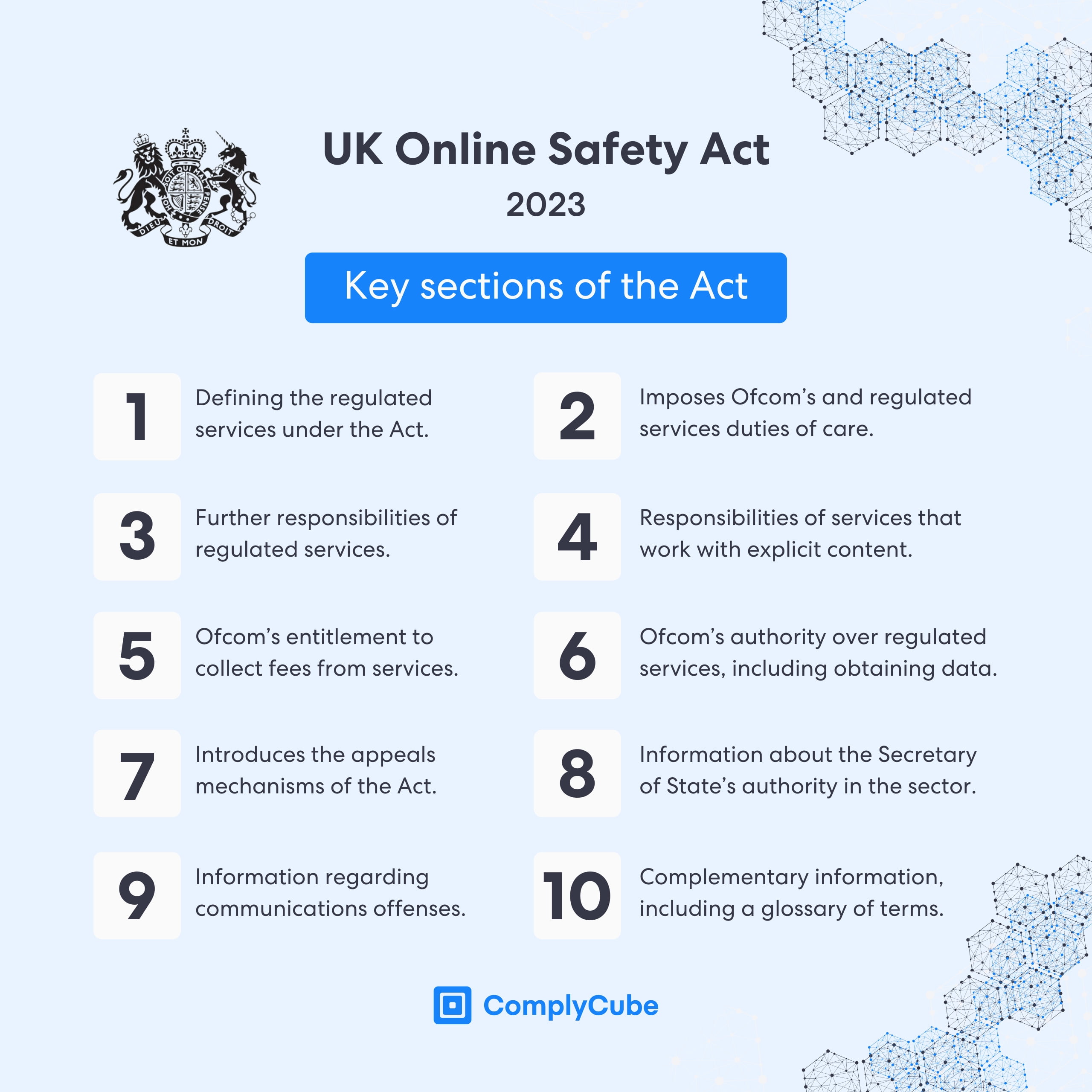

The Act is broken down into 11 key parts (12 including the introduction). They are:

- Key definitions relevant to the Act, including user-to-user services, search services, Part 3 services, and regulated services.

- The imposition of duties of care on providers of user-to-user services and search services, and Ofcom’s responsibility for issuing codes of practice.

- Further duties imposed on providers of user-to-user and search services.

- The imposition of duties on providers of internet services that publish explicit content, including user-to-user and search services.

- The policy under which Ofcom collects fees from providers of regulated services.

- Ofcom’s authority and obligations in relation to regulated services, including the power to obtain information from service providers.

- The appeals and complaints policy in relation to regulated services.

- The new powers the Secretary of State holds over the industry under this Act.

- Communications offenses.

- Supplementary information, including an index, which makes up the final two parts.

Definitions of the UK Online Safety Act

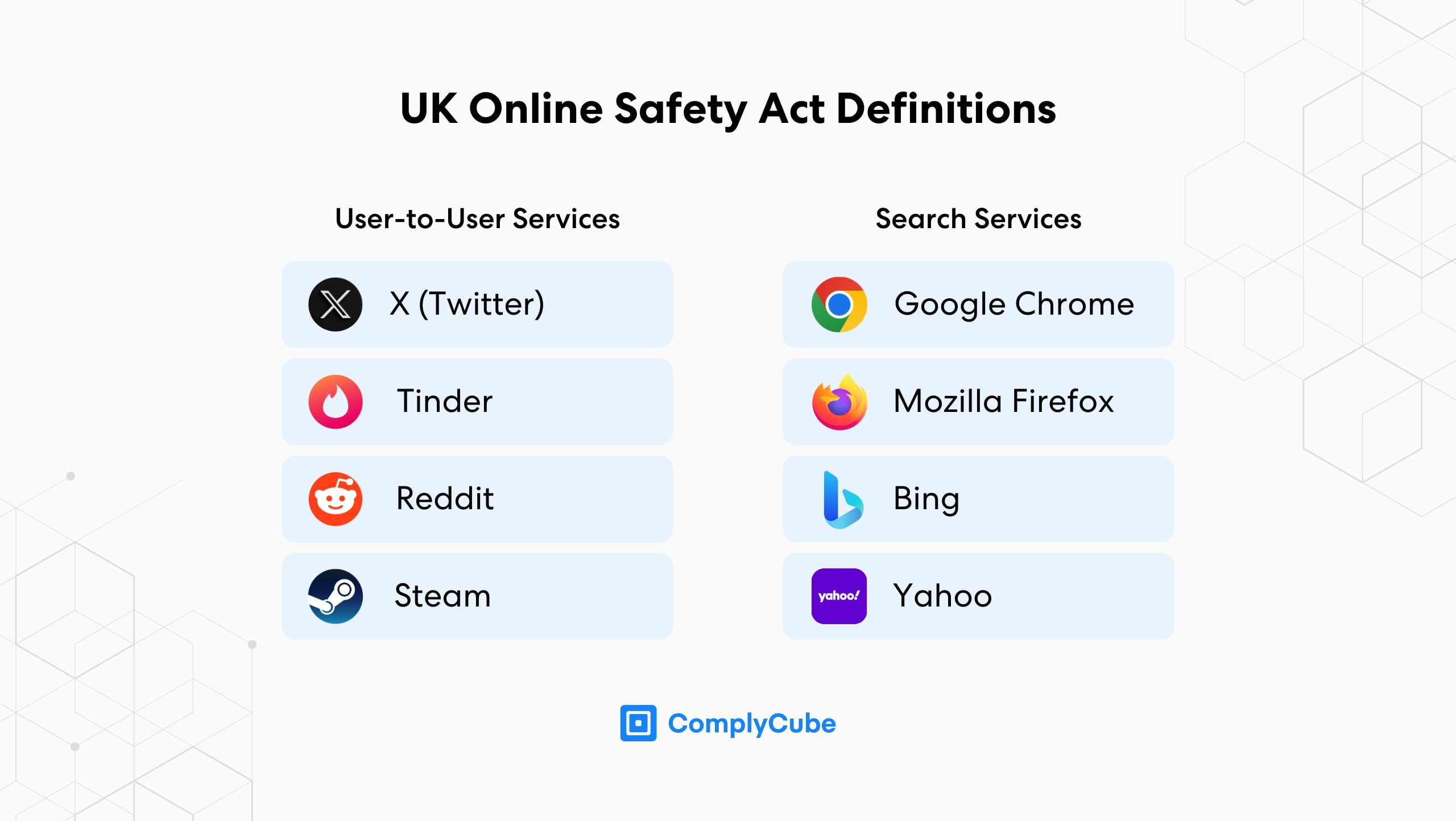

The Online Safety Act applies to companies that provide either of two key regulated services: user-to-user services and search services.

User-to-User Services

If a platform facilitates the creation and sharing of user-generated content that can be accessed by other users, it is subject to the UK Online Safety Act. This includes a wide range of user-to-user services such as:

- Social media platforms, such as X (Twitter)

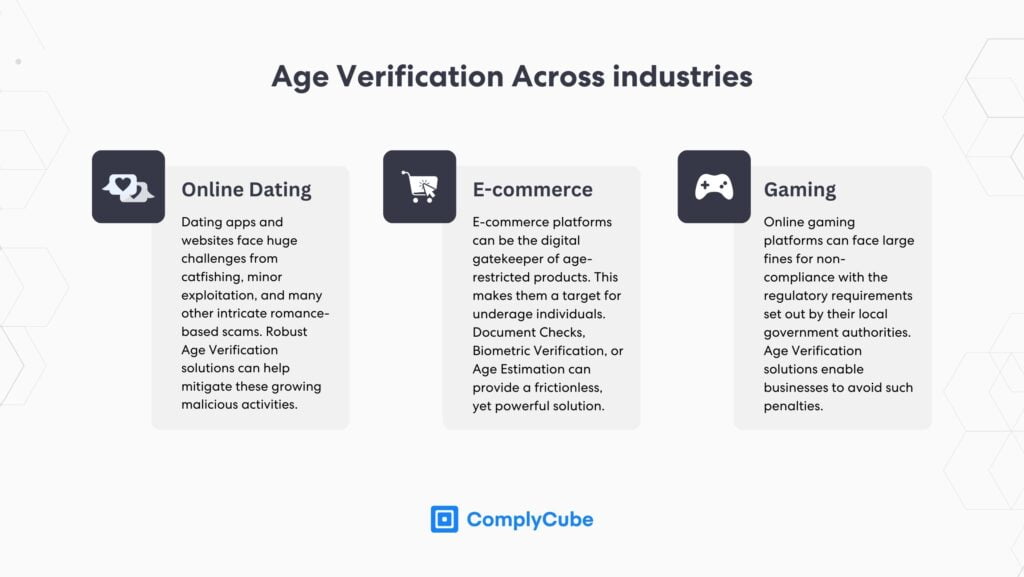

- Online dating sites, such as Tinder

- Discussion forums, such as Reddit

- Online gaming providers, such as Steam

- Adult/Explicit websites

Search Services

Any online business that provides a search engine or another means of searching the internet is defined as a search service. However, the criteria for what constitutes a regulated search engine are nuanced. According to the Act, any search engine that allows users to search more than one website or database (including, in principle, all websites or databases) falls under the law’s jurisdiction.

In contrast, search engines that only allow searches within a single website or database are not regulated by this law. Typical regulated search services are:

- Google Search

- DuckDuckGo

- Bing

- Yahoo

User Verification: What Does the Act Suggest?

Although the exact age verification specification has not yet been issued under the Act, firms operating in this industry are strongly advised to begin integrating with an Identity Verification (IDV) and age verification service. This will ensure that they are prepared for the requirements when they are released and can remain competitive.

Kids Online Safety

In the UK, data privacy regulations stipulate that users must be at least 13 years old to join a social media platform without parental consent. The Online Safety Act enhances these protections. Online platforms must implement mechanisms to estimate or verify a user’s age to prevent children from accessing inappropriate content.

Over 80% of children have experienced harmful content online.

This figure reveals a horrifying truth about the current state of online safety. While ‘harmful content’ is left open to interpretation, it is clear that current measures are not satisfactory, and the UK Online Safety Act is a pivotal step in creating stronger safeguards.

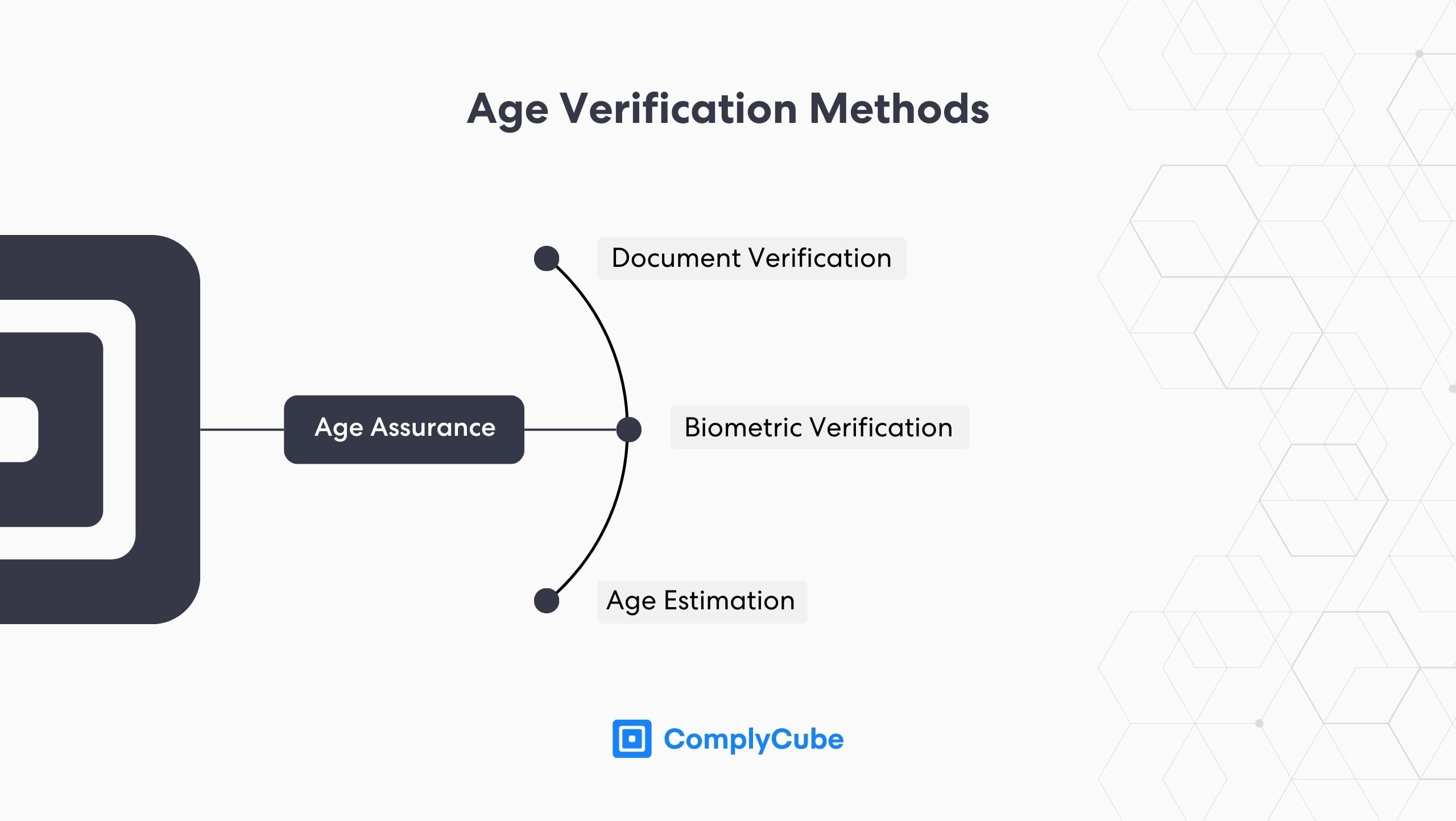

However, the OSA does not itself specify any methods for achieving age assurance. Instead, Ofcom was given the responsibility of developing these regulations. As per the draft guidance published in May 2024, age assurance processes must be technically accurate, robust, reliable, and fair.

Acceptable methods include photo-ID matching, facial age estimation, and reusable digital identity services. Conversely, methods like self-declaration of age and general contractual age restrictions are deemed ineffective. However, the use of digital IDV services is not currently mandated. For more information about achieving age assurance, read Picking Digital Identity Verification Solutions.
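As a rough illustration of how a platform might audit its own signup flow against the methods listed above, the following Python sketch classifies each method as acceptable or not. The enum, the set, and the function names are hypothetical and chosen for this example only; the classification simply mirrors the summary in this article and is not an official Ofcom list.

```python
from enum import Enum
from typing import Iterable


class AgeAssuranceMethod(Enum):
    """Hypothetical labels for the methods named in this article."""
    PHOTO_ID_MATCHING = "photo_id_matching"
    FACIAL_AGE_ESTIMATION = "facial_age_estimation"
    REUSABLE_DIGITAL_ID = "reusable_digital_id"
    SELF_DECLARATION = "self_declaration"
    CONTRACTUAL_RESTRICTION = "contractual_restriction"


# Assumption: this set mirrors the article's summary of the draft guidance.
ACCEPTABLE_METHODS = frozenset({
    AgeAssuranceMethod.PHOTO_ID_MATCHING,
    AgeAssuranceMethod.FACIAL_AGE_ESTIMATION,
    AgeAssuranceMethod.REUSABLE_DIGITAL_ID,
})


def is_acceptable(method: AgeAssuranceMethod) -> bool:
    """Return True if the method is one the article lists as acceptable."""
    return method in ACCEPTABLE_METHODS


def flow_meets_guidance(methods: Iterable[AgeAssuranceMethod]) -> bool:
    """A flow passes this check if it uses at least one acceptable method.

    Self-declaration alone is never enough, but it may appear alongside
    an acceptable method without failing the check.
    """
    return any(is_acceptable(m) for m in methods)
```

For instance, a flow that relies only on `AgeAssuranceMethod.SELF_DECLARATION` would fail this check, while one that adds `AgeAssuranceMethod.PHOTO_ID_MATCHING` would pass.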

Ofcom’s Spring 2024 update on the Act included three key takeaways:

- Regulated firms must introduce robust age verification methods to ensure they know which of their users are children.

- Institutions must implement and develop safer algorithms. This includes personalizing algorithms, so if children do not like what they see, they can let the app or website know.

- Ofcom stated that moderation must be ramped up and its efficacy increased. This includes swift action on harmful content and the enablement of safe search modes.

UK Online Safety Act for Adults

Businesses providing a user-to-user service must give adults the option to verify their identity. This gives adult users who have verified their identity the ability to filter out those who have not. However, this policy is currently limited in its effectiveness.

Most online platforms that pursue this policy still lack adequate age verification safeguards and, therefore, still allow children to access their services. For this reason, age verification at signup is the most pivotal application of the Act, yet adoption remains relatively low.

Although the Act does not prescribe the exact nature of this filtering process, it does require that it effectively:

- Prevents unverified users from interacting with content shared or generated by verified users who have opted to filter out unverified users.

- Reduces the likelihood that verified users will encounter content from non-verified users.
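The two filtering requirements above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed implementation: the `User` and `Post` types, the `filter_unverified` opt-in flag, and both function names are hypothetical, and the second requirement is modeled here as outright removal of unverified content, which is stricter than merely "reducing the likelihood".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: str
    verified: bool
    # Hypothetical opt-in: a verified user asks to filter out unverified users.
    filter_unverified: bool = False


@dataclass(frozen=True)
class Post:
    post_id: str
    author: User


def can_interact(actor: User, post: Post) -> bool:
    """Requirement 1: block unverified users from interacting with content
    by verified authors who have opted to filter out unverified users."""
    author = post.author
    if author.verified and author.filter_unverified and not actor.verified:
        return False
    return True


def visible_feed(viewer: User, posts: list[Post]) -> list[Post]:
    """Requirement 2: a verified viewer who has opted in sees no content
    from unverified authors (a strict reading of 'reduces the likelihood')."""
    if viewer.verified and viewer.filter_unverified:
        return [p for p in posts if p.author.verified]
    return list(posts)


# Usage: alice is verified and opted in, bob is unverified.
alice = User("alice", verified=True, filter_unverified=True)
bob = User("bob", verified=False)
posts = [Post("p1", alice), Post("p2", bob)]
print(can_interact(bob, posts[0]))                      # False
print([p.post_id for p in visible_feed(alice, posts)])  # ['p1']
```

A real platform might prefer down-ranking over removal for the second requirement; the sketch uses removal only because it is the simplest behavior that satisfies both points.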

The UK Online Safety Act: at a Glance

While the Act does not wish to restrict free speech, a balance must be struck between preventing harmful content online and preserving users’ ability to create or write anything they wish. For this reason, the UK government decided the Act was a necessity for private companies, large entities, and public institutions that provide digital services.

The British government, along with the European Union (EU) through its Digital Services Act (DSA), is among the first movers to implement a comprehensive framework for protecting minors from harmful and adult content and for regulating the protective measures on age-restricted products.

The Act’s approach to safeguarding minors also covers detecting and preventing child sexual abuse material. This involves monitoring messages and content irrespective of the technology social media platforms use, including end-to-end encryption.

Many of the concerns addressed by the Act are also taken up by other laws and organizations, including the Children’s Online Privacy Protection Act (COPPA), a US law, and the Electronic Frontier Foundation (EFF), a digital rights advocacy group, among many others. All of these engage with similar digital rights questions, particularly the safeguarding of minors from adult websites, content, and sexual abuse.

About ComplyCube’s Identity Verification Solutions

ComplyCube is a global leader in Know Your Customer (KYC) and Anti-Money Laundering (AML) products, including a comprehensive suite of IDV solutions that cover digital firms’ regulatory obligations.

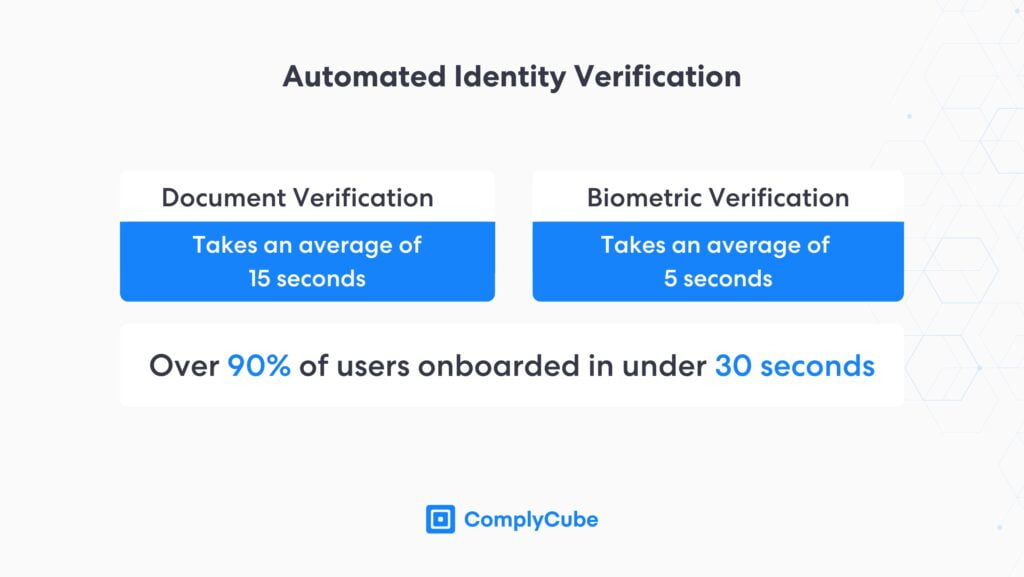

Boasting smart document verification technology, ComplyCube captures user information such as birth date, ID number, expiry and issuance dates, and many other crucial data and security points. This typically forms half of the IDV process, followed by biometric verification, which enhances the identification result.

Biometric verification matches the image in the ID to a selfie or video taken during the acquisition process. Such a pairing creates an extremely high Level of Assurance (LoA) in a client’s real identity. ComplyCube has designed these processes to be as frictionless as possible, and both can be completed together in one seamless workflow in less than 30 seconds.

However, in some instances, firms do not require the same LoA in client identity. On these occasions, ComplyCube’s age estimation technology offers an even more streamlined UX and can accurately estimate a user’s age from a selfie only.

This works well when businesses require an age-gating system but do not want users to give their personal information or create an unnecessarily time-consuming acquisition process. Age estimation can be completed accurately in 5 seconds.

Integrate with an Age Verification System Today

Contact a ComplyCube specialist today for more information on how the UK Online Safety Act affects your business or to learn more about integrating with ComplyCube’s digital identity and age verification system.